What is a DAG in Apache Airflow?

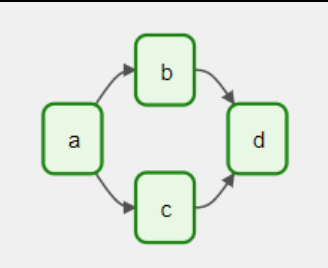

A DAG (Directed Acyclic Graph) is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies. "Directed" means every dependency has a direction (one task runs before another), and "acyclic" means the dependencies never loop back, so a run can never reach the same task a second time.

A very simple example is given below.
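As a minimal sketch of the idea, independent of Airflow itself, Python's standard-library `graphlib` module can model tasks and their dependencies. The task names here are hypothetical and chosen only for illustration. The sketch shows that a valid run order exists when the graph is acyclic, and that adding an edge back to an earlier task is rejected as a cycle.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical tasks: each key maps to the set of tasks it depends on.
dependencies = {
    "download_rates": set(),
    "process_rates": {"download_rates"},
    "store_rates": {"process_rates"},
}

# An acyclic graph always has at least one valid execution order.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)

# Making the first task depend on the last one creates a loop,
# so the graph is no longer a DAG and no run order exists.
cyclic = dict(dependencies)
cyclic["download_rates"] = {"store_rates"}
try:
    list(TopologicalSorter(cyclic).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print(has_cycle)
```

Airflow performs its own validation of DAGs; this sketch only illustrates the "directed" and "acyclic" properties.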

How to use a DAG in Apache Airflow?

In Airflow, DAGs are defined in Python code. An example is given below.

from airflow import DAG
from datetime import datetime, timedelta

# Default arguments applied to every task in the DAG
default_args = {
    "owner": "airflow",
    "email_on_failure": False,
    "email_on_retry": False,
    "email": "example@gmail.com",
    "retries": 1,
    "retry_delay": timedelta(minutes=5),
}

with DAG("forex_data_pipeline", start_date=datetime(2023, 11, 1), schedule_interval="@daily",
         default_args=default_args, catchup=False) as dag:
    pass  # placeholder: the tasks of the pipeline are defined here

The code above defines an Apache Airflow DAG (Directed Acyclic Graph) for a data pipeline related to forex data:

- First, we import DAG from airflow, along with datetime and timedelta from the Python standard library.

- Next comes the default_args dictionary, in which we specify:

  - "owner": "airflow" (The owner can be anything. It is used to classify your DAGs in Airflow.)

  - "email_on_failure": False (Whether to send an email when a task fails.)

  - "email_on_retry": False (Whether to send an email when a task is retried.)

  - "email": "example@gmail.com" (The address that receives notifications when a task in the DAG fails or is retried, provided the corresponding email_on_failure or email_on_retry flag is True. Please note that SMTP has to be set up in order to receive emails.)

  - "retries": 1 (How many times a failed task is retried. Here a task is retried once before it is marked as failed.)

  - "retry_delay": timedelta(minutes=5) (The amount of time to wait before retrying a failed task.)

- Now we initialize a DAG object named "forex_data_pipeline". The first argument is the DAG's identifier, and start_date=datetime(2023, 11, 1) is the start date for the DAG. We have to set these two parameters, otherwise we will get an error.

- schedule_interval, as the name suggests, defines how often the DAG should run. Here "@daily" runs it once a day.

- default_args provides the configuration parameters for the DAG. Please note that these are applied to every task in the DAG.

- catchup=False means Airflow should not backfill runs for the schedule intervals between the start date and the current date. Only the most recent interval is run.
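To make the effect of catchup more concrete, here is a simplified model in plain Python. It is not Airflow's scheduler: the function name `daily_runs_to_schedule` and the fixed "current" time are assumptions made for illustration. It lists the daily intervals that have fully elapsed since the start date and returns either all of them (catchup=True) or only the latest one (catchup=False).

```python
from datetime import datetime, timedelta


def daily_runs_to_schedule(start_date, now, catchup):
    """Return the start of each daily interval that would be run.

    Simplified model (not Airflow's scheduler): an interval becomes
    eligible only after it has fully elapsed.
    """
    one_day = timedelta(days=1)
    completed = []
    interval_start = start_date
    while interval_start + one_day <= now:
        completed.append(interval_start)
        interval_start += one_day
    if catchup:
        return completed      # backfill every missed interval
    return completed[-1:]     # only the most recent interval


start = datetime(2023, 11, 1)
now = datetime(2023, 11, 5, 12, 0)  # assumed "current" time for the example

with_catchup = daily_runs_to_schedule(start, now, catchup=True)
without_catchup = daily_runs_to_schedule(start, now, catchup=False)
print(len(with_catchup))
print(without_catchup)
```

With the start date from the example and an assumed current time of midday on 5 November 2023, the model yields four runs with catchup enabled and a single run, for the interval starting on 4 November, with catchup disabled.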

