Let's understand how to fetch the log of a task using the REST API in Apache Airflow.

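To locate the logs of a task, send a GET request to the following endpoint, replacing the host with your own Airflow webserver and the placeholders in braces with actual values: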

https://airflow.apache.org/api/v1/dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/logs/{task_try_number}
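
As a minimal sketch, the endpoint template above can be filled in programmatically. The helper name `build_log_url` and the `base_url` parameter are assumptions made for illustration and are not part of Airflow. Each path segment is percent-encoded because DAG run IDs often contain characters such as `:` and `+`.

```python
from urllib.parse import quote


def build_log_url(base_url, dag_id, dag_run_id, task_id, try_number):
    """Fill in the task-log endpoint template for a given Airflow host.

    Hypothetical helper for illustration; not part of Airflow itself.
    """
    # Percent-encode each path segment; safe="" also encodes '/', ':' and '+'.
    dag, run, task = (quote(str(part), safe="") for part in (dag_id, dag_run_id, task_id))
    return (
        f"{base_url.rstrip('/')}/api/v1/dags/{dag}"
        f"/dagRuns/{run}/taskInstances/{task}/logs/{int(try_number)}"
    )


# Example values taken from the script in this post.
print(
    build_log_url(
        "http://localhost:8080",
        "retail",
        "manual__2023-01-01T00:00:00+00:00",
        "sample-task",
        1,
    )
)
```

The unencoded form of the run ID may also work in your setup; encoding it is simply the more defensive choice.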

Now let's write a simple program to fetch the log using the API. For more details, please visit

https://airflow.apache.org/docs/apache-airflow/stable/stable-rest-api-ref.html#operation/get_log

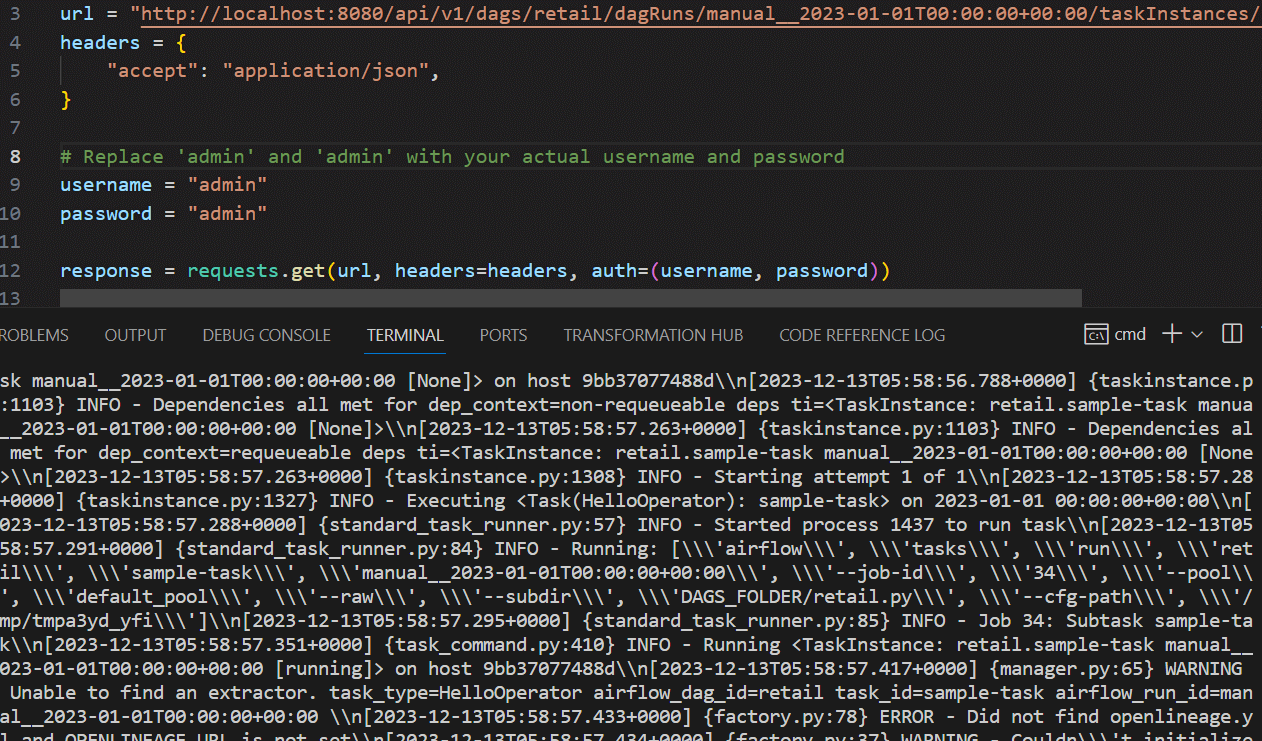

    import requests

    # Log endpoint for DAG "retail", run "manual__2023-01-01T00:00:00+00:00",
    # task "sample-task", try number 1
    url = "http://localhost:8080/api/v1/dags/retail/dagRuns/manual__2023-01-01T00:00:00+00:00/taskInstances/sample-task/logs/1"

    headers = {
        "accept": "application/json",
    }

    # Replace 'admin' and 'admin' with your actual username and password
    username = "admin"
    password = "admin"

    # Send the GET request with HTTP basic authentication
    response = requests.get(url, headers=headers, auth=(username, password))

    if response.status_code == 200:
        data = response.json()
        print("Data received:")
        print(data)
    else:
        print(f"Failed to retrieve data. Status code: {response.status_code}")
        print(response.text)
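
When the request succeeds with `accept: application/json`, the response body is a JSON object with a `content` field (the log text) and a `continuation_token` field. A minimal sketch of pulling those two fields out of the parsed response follows. The function name `extract_log_text` and the sample payload are made up for illustration, and the exact shape of `content` may vary between Airflow versions.

```python
def extract_log_text(payload):
    """Return (log text, continuation token) from a parsed get_log JSON body.

    Hypothetical helper for illustration; missing fields fall back to defaults.
    """
    content = payload.get("content", "")
    token = payload.get("continuation_token")
    return content, token


# Hypothetical sample body, invented for this example (not real Airflow output).
sample = {
    "continuation_token": "abc123",
    "content": "INFO - task started",
}

text, token = extract_log_text(sample)
print(text)
print(token)
```

In the script above, this would be called as `extract_log_text(response.json())` inside the `status_code == 200` branch.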

- To fetch the logs, the DAG ID, DAG run ID, task ID, and task try number are required.

- Authentication is necessary, and the username and password must be provided.

- Obtain the required details from the Airflow UI, or list the DAGs with the following curl command: `curl -X GET "http://localhost:8080/api/v1/dags" -H "accept: application/json" --user "admin:admin"`.

- This command provides information about the DAGs, which can be used to retrieve DAG run IDs and other details.

- Once all the necessary details are gathered, execute the Python script to check the data.
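
The lookup steps above can also be sketched in Python. This example assumes the DAG runs endpoint (`/api/v1/dags/{dag_id}/dagRuns`) returns a JSON object with a `dag_runs` list whose items carry a `dag_run_id`. The helper names `basic_auth_header`, `fetch_json`, and `dag_run_ids` are hypothetical. The standard library's `urllib` is used here only to keep the sketch dependency-free; `requests` works just as well.

```python
import base64
import json
import urllib.request


def basic_auth_header(username, password):
    """Build request headers for HTTP basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return {"Authorization": f"Basic {token}", "accept": "application/json"}


def fetch_json(url, username, password, timeout=10):
    """GET a URL with basic auth and parse the JSON body (needs a running Airflow)."""
    request = urllib.request.Request(url, headers=basic_auth_header(username, password))
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def dag_run_ids(payload):
    """Collect the run IDs from a parsed DAG runs listing."""
    return [run["dag_run_id"] for run in payload.get("dag_runs", [])]


# Hypothetical listing, invented for this example (not real Airflow output).
sample = {"dag_runs": [{"dag_run_id": "manual__2023-01-01T00:00:00+00:00"}], "total_entries": 1}
print(dag_run_ids(sample))
```

Against a live instance, the call would look like `dag_run_ids(fetch_json("http://localhost:8080/api/v1/dags/retail/dagRuns", "admin", "admin"))`, with the host, DAG ID, and credentials replaced by your own.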

We at Helical have more than 10 years of experience in providing solutions and services in the data domain and have served more than 85 clients. Please reach out to us for assistance, consulting, services, maintenance, or a POC, and to hear about our past experience with Airflow. You can reach us at nikhilesh@Helicaltech.com

Thank You

Abhishek Mishra

Helical IT Solutions