DATA VAULT MODELING

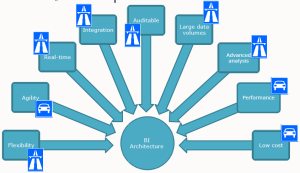

Data Vault Modeling is a database modeling method that is designed to provide long-term historical storage of data coming in from multiple operational systems. It is also a method of looking at historical data that, apart from the modeling aspect, deals with issues such as auditing, tracing of data, loading speed and resilience to change.

Data Vault Modeling focuses on several things:

First, it emphasizes the need to trace where all the data in the database came from. This means that every row in a Data Vault must be accompanied by record source and load date attributes, enabling an auditor to trace values back to the source.

Second, it makes no distinction between good and bad data (“bad” meaning not conforming to business rules). This is summarized in the statement that a Data Vault stores “a single version of the facts”, as opposed to the practice in other data warehouse methods of storing “a single version of the truth”, where data that does not conform to the definitions is removed or “cleansed”.

Third, the modeling method is designed to be resilient to change in the business environment that the stored data comes from, by explicitly separating structural information from descriptive attributes.

Finally, Data Vault is designed to enable parallel loading as much as possible, so that very large implementations can scale out without the need for major redesign.

Data Vault’s philosophy is that all data is relevant data, even if it is not in line with established definitions and business rules. If data does not conform to these definitions and rules, then that is a problem for the business, not the data warehouse. The determination of data being “wrong” is an interpretation of the data that stems from a particular point of view that may not be valid for everyone or at every point in time. Therefore the Data Vault must capture all data; the data is interpreted only when it is reported on or extracted from the Data Vault.
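The idea of storing facts first and interpreting them later can be illustrated with a minimal sketch. It assumes an in-memory SQLite database; the table `customer_detail`, its columns, the sample rows and the email rule are all hypothetical and chosen only for illustration. Every row carries a load date and a record source, nothing is cleansed on the way in, and the business rule is applied only when the data is read.

```python
import sqlite3


def build_vault():
    """Load every incoming row as-is, with audit attributes on each row."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE customer_detail (
            customer_number TEXT NOT NULL,  -- hypothetical business key
            email           TEXT,           -- stored even if malformed or missing
            load_date       TEXT NOT NULL,  -- when the row arrived
            record_source   TEXT NOT NULL   -- which system it came from
        )
        """
    )
    rows = [
        ("C001", "ann@example.com", "2024-01-01", "crm"),
        ("C002", "not-an-email", "2024-01-01", "webshop"),  # "bad" data, kept anyway
        ("C003", None, "2024-01-02", "crm"),                # missing value, kept anyway
    ]
    conn.executemany("INSERT INTO customer_detail VALUES (?, ?, ?, ?)", rows)
    return conn


def conforming_customers(conn):
    """Apply an (assumed) business rule at read time, not at load time."""
    cur = conn.execute(
        """
        SELECT customer_number, record_source
        FROM customer_detail
        WHERE email LIKE '%_@_%'
        ORDER BY customer_number
        """
    )
    return cur.fetchall()


conn = build_vault()
stored = conn.execute("SELECT COUNT(*) FROM customer_detail").fetchone()[0]
print(stored, conforming_customers(conn))
```

Because the rule lives in the query rather than in the load, a different report could apply a different rule to the same stored rows, and an auditor can still see which source delivered the non-conforming values.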

BASIC NOTIONS

Data Vault attempts to solve the problem of dealing with change in the environment by separating the business keys (that do not mutate as often, because they uniquely identify a business entity) and the associations between those business keys, from the descriptive attributes of those keys.

The business keys and their associations are structural attributes, forming the skeleton of the data model. The Data Vault method has as one of its main axioms that real business keys only change when the business changes and are therefore the most stable elements from which to derive the structure of a historical database. If you use these keys as the backbone of a Data Warehouse, you can organize the rest of the data around them. The keys are stored in tables with a few constraints on the structure; these key tables are called Hubs. Choosing the correct keys for the Hubs is therefore of prime importance for the stability of your model.
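A Hub as described above might look like the following sketch, again using SQLite from Python. The table name `hub_customer`, the surrogate key column and the `load_hub` helper are assumptions made for illustration, not a prescribed layout. The point is that the Hub holds only the business key plus the load date and record source, and that loading the same key twice leaves the first record intact.

```python
import sqlite3


def create_hub(conn):
    """Create a hypothetical Hub: one row per distinct business key."""
    conn.execute(
        """
        CREATE TABLE hub_customer (
            hub_customer_id INTEGER PRIMARY KEY,   -- surrogate key (assumed)
            customer_number TEXT NOT NULL UNIQUE,  -- the business key
            load_date       TEXT NOT NULL,         -- first time the key was seen
            record_source   TEXT NOT NULL          -- system that first delivered it
        )
        """
    )


def load_hub(conn, business_keys, load_date, record_source):
    """Insert only keys that are not yet present; known keys are left untouched."""
    conn.executemany(
        "INSERT OR IGNORE INTO hub_customer "
        "(customer_number, load_date, record_source) VALUES (?, ?, ?)",
        [(key, load_date, record_source) for key in business_keys],
    )


conn = sqlite3.connect(":memory:")
create_hub(conn)
load_hub(conn, ["C001", "C002"], "2024-01-01", "crm")
load_hub(conn, ["C002", "C003"], "2024-01-02", "webshop")  # C002 already known
print(conn.execute(
    "SELECT customer_number, record_source FROM hub_customer ORDER BY customer_number"
).fetchall())
```

Descriptive attributes such as names or addresses are deliberately absent from this table; in this sketch they would live in separate tables that reference the Hub, so that changes to them never alter the key structure.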

DATA VAULT AND DIMENSIONAL MODELLING

The Data Vault modelled layer is normally used to store data. It is not optimized for query performance, nor is it easy to query with well-known query tools such as Cognos, SAP Business Objects and Pentaho. Since these end-user computing tools expect or prefer their data to be contained in a dimensional model, a conversion is usually necessary.

For performance reasons, the dimensional model is usually implemented as physical relational tables once its design has been approved.
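As a rough sketch of such a conversion, the example below derives a customer dimension from a Hub and a table of descriptive attributes. Tables of that second kind are commonly called Satellites in Data Vault terminology, a term this article does not use. All table names, columns and sample rows are hypothetical, and the rule "take the most recent load per key" is just one possible way to interpret the history.

```python
import sqlite3


def build_dimension(conn):
    """Materialize a hypothetical dim_customer table from vault tables."""
    conn.executescript(
        """
        CREATE TABLE hub_customer (
            hub_customer_id INTEGER PRIMARY KEY,
            customer_number TEXT NOT NULL UNIQUE
        );
        CREATE TABLE sat_customer (
            hub_customer_id INTEGER NOT NULL
                REFERENCES hub_customer (hub_customer_id),
            load_date       TEXT NOT NULL,
            name            TEXT,
            city            TEXT,
            PRIMARY KEY (hub_customer_id, load_date)
        );
        INSERT INTO hub_customer VALUES (1, 'C001'), (2, 'C002');
        INSERT INTO sat_customer VALUES
            (1, '2024-01-01', 'Ann', 'Delft'),
            (1, '2024-03-01', 'Ann', 'Utrecht'),  -- later version of C001
            (2, '2024-02-01', 'Bob', 'Leiden');

        -- The dimension keeps one row per business key: the latest version.
        CREATE TABLE dim_customer AS
        SELECT h.customer_number, s.name, s.city
        FROM hub_customer h
        JOIN sat_customer s ON s.hub_customer_id = h.hub_customer_id
        WHERE s.load_date = (
            SELECT MAX(s2.load_date)
            FROM sat_customer s2
            WHERE s2.hub_customer_id = h.hub_customer_id
        );
        """
    )


conn = sqlite3.connect(":memory:")
build_dimension(conn)
print(conn.execute(
    "SELECT customer_number, city FROM dim_customer ORDER BY customer_number"
).fetchall())
```

The vault tables still hold the full history after this step; only the dimension reflects a particular reading of it, which is why it can be rebuilt at any time with a different rule.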

Note that while it is relatively straightforward to move data from a Data Vault model to a (cleansed) dimensional model, the reverse is not as easy.

For more detail, refer to: http://www.slideshare.net/dlinstedt

PUSHPRAJ (BI-Developer)

You shouldn’t need to take data from a dimensional model to a Data Vault – that’s a waste of time anyway.

You are, however, better off building a Data Vault first and using that as a source for your dimensional model.

There are now Data Warehouse Automation tools like BIReady that design and build a Data Vault from the MODEL of your source(s) (which BIReady can reverse-engineer) and then automatically build your dimensional model(s) using a simple wizard process.

Thanks, Ian .. for sharing your opinion …