Audit Logs in Pentaho Data Integration

Audit Logs at Job level and Transformation Level are very useful for ETL projects to track the details regarding Job name, Start Date, End Date, Transformation Name, Error,Number of Lines Read, Number of Line Write, Number of lines from Input, Number of Lines in output etc.

Make data easy with Helical Insight.

Helical Insight is the world’s best open source business intelligence tool.

I have created a simple PDI Job to track audit logs at Job level, Job entry level and Transformation Level in Mysql database. Please find the process as follows:

Created a Kettle Job FileToDB.kjb which contains two job entries (Start and Transformation) and one database connection(PSQL_Log). I have created a separate database ‘PDI_Audit_Logs’ in Mysql DB. I configured this DB details as database connection.

Right click on the work area and select Job settings as shown in below screen shot.

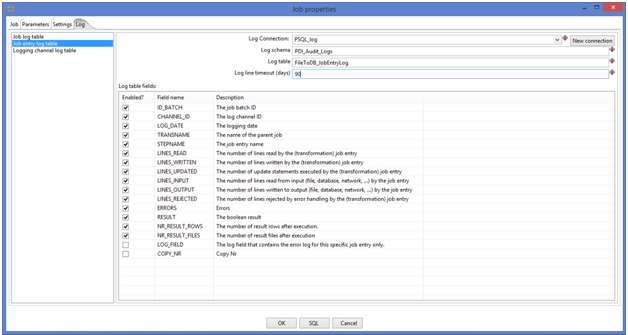

It will pop up a Job Properties window. Click on Log tab. Select ‘Job entry log table’ option, it will display the variable names under this section. You can enable all the variable are if you consider default variables is also fine. Now configure the log table connection as shown in below screen shot:

Log Connection–> We have already created this connection.(In this case MYSQL DB)

Log Schema–>Existed Database Name

**Log Table–> Table Name (in which you want to store the tracked details). It can be existed table name. We will see the process for new table in the later steps.

Log line timeout–>Number of days that you want to store the log details.

Make data easy with Helical Insight.

Helical Insight is the world’s best open source business intelligence tool.

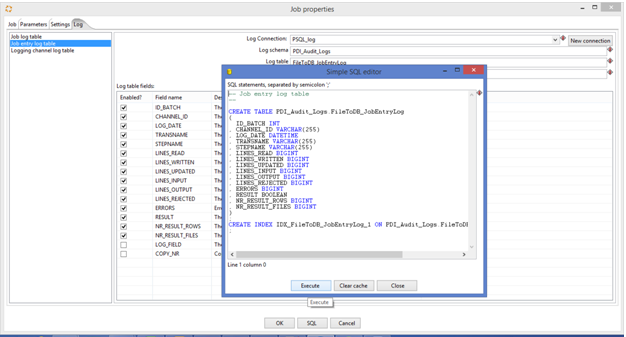

**If you are creating the log table first time you have to click on SQL button which is at the below otherwise click on Ok button. So SQL button will pop up SQL statement to create this table with the above shown column names as . I gave log table name as “FileToDB_JobEntryLog” My job name and level of logging.

click on Execute button, it will create that table in the given schema/database. And will prompt below window. click on OK and then click on close of SQL editor.

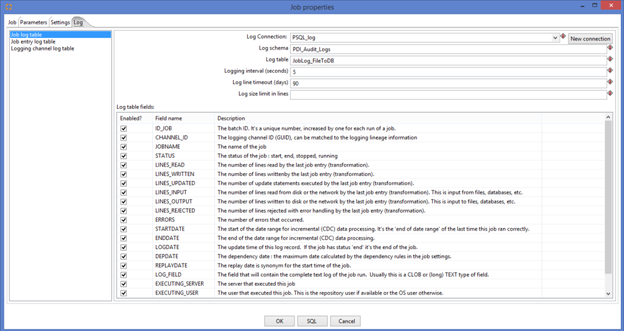

So Job Entry level Log configuration is done. Now select Job Log table section to configure the log connection for Job level. Configure the values as shown in below Screen shot.

And for Log Table Name, same steps have to follow as explained above .

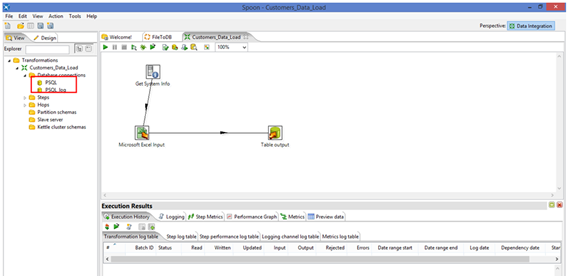

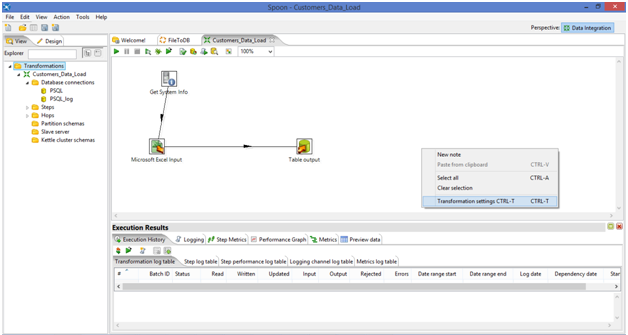

Now Job Audit Logs settings are done. Open the transformation. it has system info, file input and DB output steps connected with Hops. And the DB output schema is different and Audit Logs schema is different So we have created two connection PSQL and PSQL_Log.

Right click on the work area and select Transformation Settings.

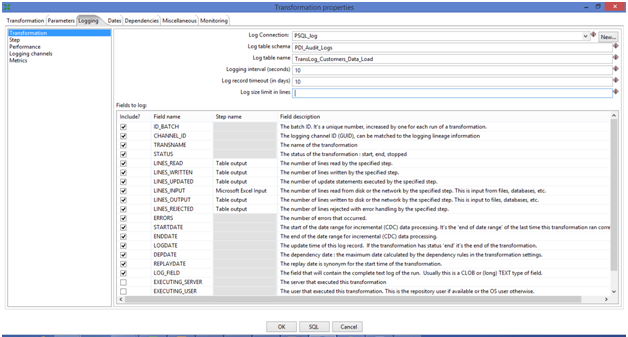

It will pop up Transformation Properties window. Select Logging tab.And then click on Transformation option. It will display the variables that can be tracked from this option.

For the fields LINES_READ,LINES_WRITTEN,LINES_UPDATED,LINES_INPUT,LINES_OUTPUT,LINES_REJECTED should be given a step names that it should track from which step accordingly.

Same like Job settings here also you have to configure Log connections and click on SQL button if it is first time to create the Log Table Name otherwise click on OK.

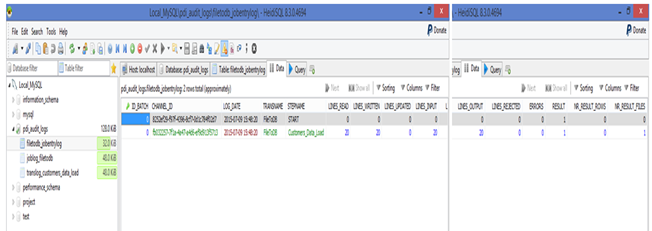

Now Audit Logs created for transformation also. So execute the Job. And check in the database for these Log tables. It will create 3 tables (job Entry level, job level, Transformation level ) under the given schema.

Make data easy with Helical Insight.

Helical Insight is the world’s best open source business intelligence tool.

Job Entry Level Table:

As there are two Job entries it generated the details for both the job entries

Job Level Log Table:

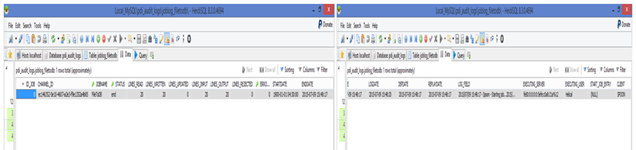

Transformation Level Log Table:

Hope this topic will be useful.

Thank you.

Best Open Source Business Intelligence Software Helical Insight is Here