What is Apache Airflow?

Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. Airflow’s extensible Python framework lets you build workflows that connect with virtually any technology. Apache Airflow was created at Airbnb and has since evolved into a robust tool. It has a web interface that helps you manage the state of your workflows.

How to use Apache Airflow?

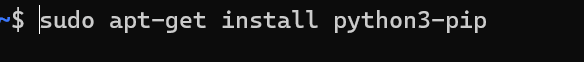

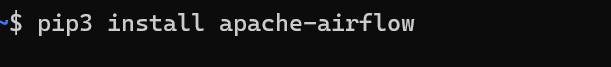

To use Apache Airflow, we first need to install it. Installing Airflow requires Python and pip.

- First, we install pip

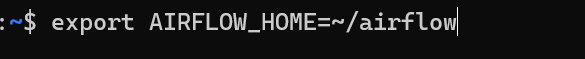

- We need to set the path

- Now we can install Airflow using pip

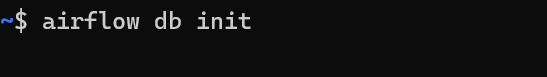

- Next, we need to initialize the Airflow database, using the following command

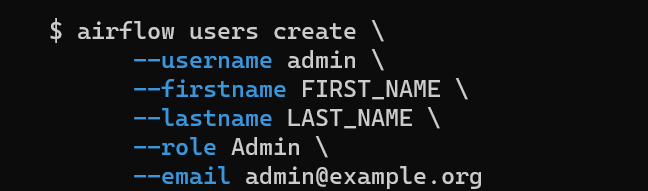

- Now we can create a user with the following command

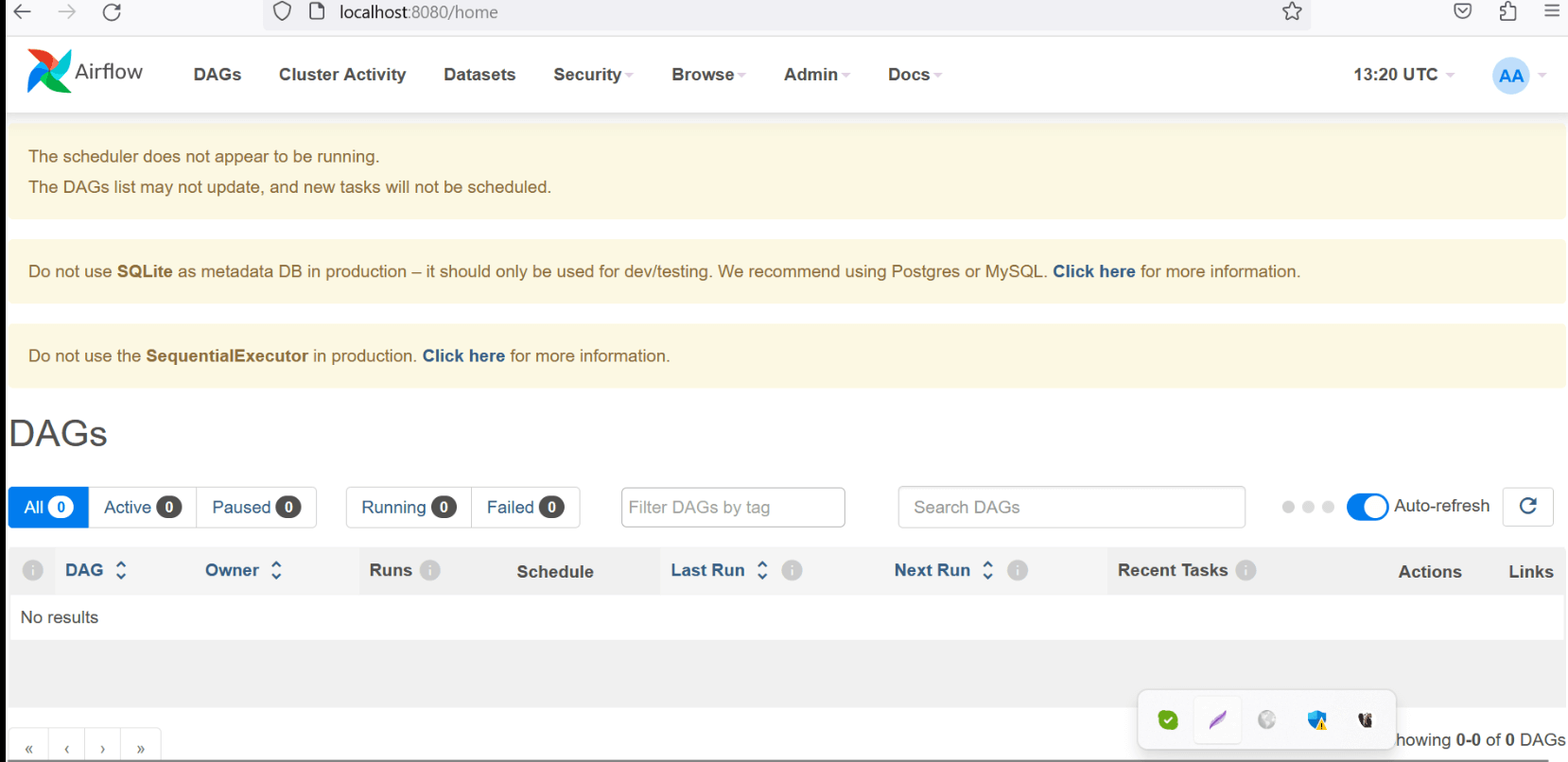

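- Now we can run Airflow by starting the web server (the scheduler, which triggers the tasks, is a separate process started with airflow scheduler)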

airflow webserver
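The pip installation step above can be made more reproducible by pinning Airflow against one of the constraints files that the Airflow project publishes per release and Python version. The following is a minimal sketch that only builds and prints such a command. The version `2.7.3` is an assumption chosen for illustration, and `constraints_url` and `build_install_command` are hypothetical helpers, not part of Airflow.

```python
import sys

# Assumed Airflow version for illustration; substitute the release you want.
AIRFLOW_VERSION = "2.7.3"


def constraints_url(airflow_version: str, python_version: str) -> str:
    """Return the URL of the constraints file for an Airflow/Python pair."""
    return (
        "https://raw.githubusercontent.com/apache/airflow/"
        f"constraints-{airflow_version}/constraints-{python_version}.txt"
    )


def build_install_command(airflow_version: str, python_version: str) -> list:
    """Build (but do not run) a pinned `pip install` command for Airflow."""
    return [
        sys.executable, "-m", "pip", "install",
        f"apache-airflow=={airflow_version}",
        "--constraint", constraints_url(airflow_version, python_version),
    ]


if __name__ == "__main__":
    # Use the major.minor version of the interpreter running this script.
    py = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(" ".join(build_install_command(AIRFLOW_VERSION, py)))
```

A constraints file exists only for the Python versions that a given Airflow release supports, so it is worth checking that the printed URL resolves before running the command.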

Now let’s check our first example

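```python
from datetime import datetime, timedelta

from airflow import DAG
from airflow.decorators import task  # needed for the @task decorator
from airflow.operators.bash import BashOperator  # Airflow 2 import path

# Defaults applied to every task in the DAG
default_args = {
    'owner': 'airflow',
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

with DAG(dag_id="test", start_date=datetime(2023, 11, 1),
         schedule_interval="@daily", default_args=default_args) as dag:
    # Task 1: run a shell command that prints "hello"
    hello = BashOperator(task_id="hello", bash_command="echo hello")

    # Task 2: a Python function turned into a task
    @task()
    def airflow():
        print("World")

    # Run "hello" first, then the Python task
    hello >> airflow()
```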

Now let’s understand our example

- First, we import the modules and classes needed to work with Apache Airflow

- Next comes default_args, which contains default parameters for the tasks in the DAG: the owner (airflow), the number of retries for each task (1), and the delay between retries (5 minutes)

- After that, we initialize a new Airflow DAG with the id test, a start_date, a schedule_interval of @daily (one run per day), and the default_args

- Then we create a BashOperator task with the task ID “hello”, which prints hello

- Then we use the @task() decorator to turn the Python function airflow(), which prints World, into a task

- Finally, we set the task dependency, so that hello runs before airflow()

- hello >> airflow()
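To make the dependency step above more concrete, here is a small, Airflow-free sketch of how a `>>` operator can record "run this before that". `ToyTask` and `run_order` are hypothetical names invented for this illustration. This is not Airflow's actual implementation, only the same idea built on Python's `__rshift__` hook.

```python
class ToyTask:
    """Hypothetical stand-in for an Airflow task; not Airflow's real class."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []  # tasks that must run after this one

    def __rshift__(self, other):
        # `self >> other` records that `other` depends on `self`.
        self.downstream.append(other)
        # Returning `other` lets chains such as a >> b >> c work left to right.
        return other


def run_order(start):
    """Return task ids reachable from `start` in a valid execution order."""
    visited, post_order = set(), []

    def visit(task):
        if task.task_id in visited:
            return
        visited.add(task.task_id)
        for child in task.downstream:
            visit(child)
        post_order.append(task.task_id)

    visit(start)
    # Reversed depth-first post-order is a topological order of the graph.
    return post_order[::-1]


if __name__ == "__main__":
    hello = ToyTask("hello")
    world = ToyTask("airflow")
    hello >> world
    print(run_order(hello))  # ['hello', 'airflow']
```

In the real DAG, the scheduler uses the recorded dependencies in a similar way: a task is only started once everything upstream of it has succeeded.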

We at Helical are experts in the modern data stack, including Airflow, and provide extensive consulting, services, and maintenance support for Airflow. Please reach out to us at nikhilesh@Helicaltech.com to learn more about our services.
