Databricks consulting

Helical IT Solutions stands out as a premier provider of Databricks consulting services, offering unparalleled expertise to businesses seeking optimal data solutions. With a dedicated team of seasoned professionals, they specialize in guiding clients through the intricacies of Databricks implementation, optimization, and ongoing support. From data engineering to machine learning, Helical IT Solutions ensures that organizations harness the full potential of Databricks, delivering tailored solutions that elevate data analytics capabilities and drive strategic decision-making.

Choose Helical IT Solutions for transformative Databricks consulting services that pave the way for sustainable growth and innovation.

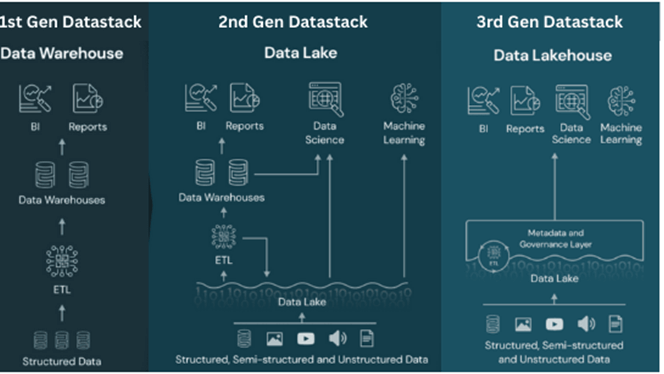

What is Databricks Lakehouse?

Databricks is the company behind tools such as Apache Spark, MLflow, and Delta Lake, and the Databricks platform is built on top of Apache Spark. Databricks is a cloud-based platform that unifies data, analytics, and AI. It allows you to build, deploy, and manage data engineering, data science, machine learning, and business analytics applications. Databricks also integrates with cloud services and technologies such as AWS, Azure, and GCP.

Using Databricks can help you adopt and migrate to a modern data stack.