Introduction

In this blog post, we cover how to implement a data lake on Azure.

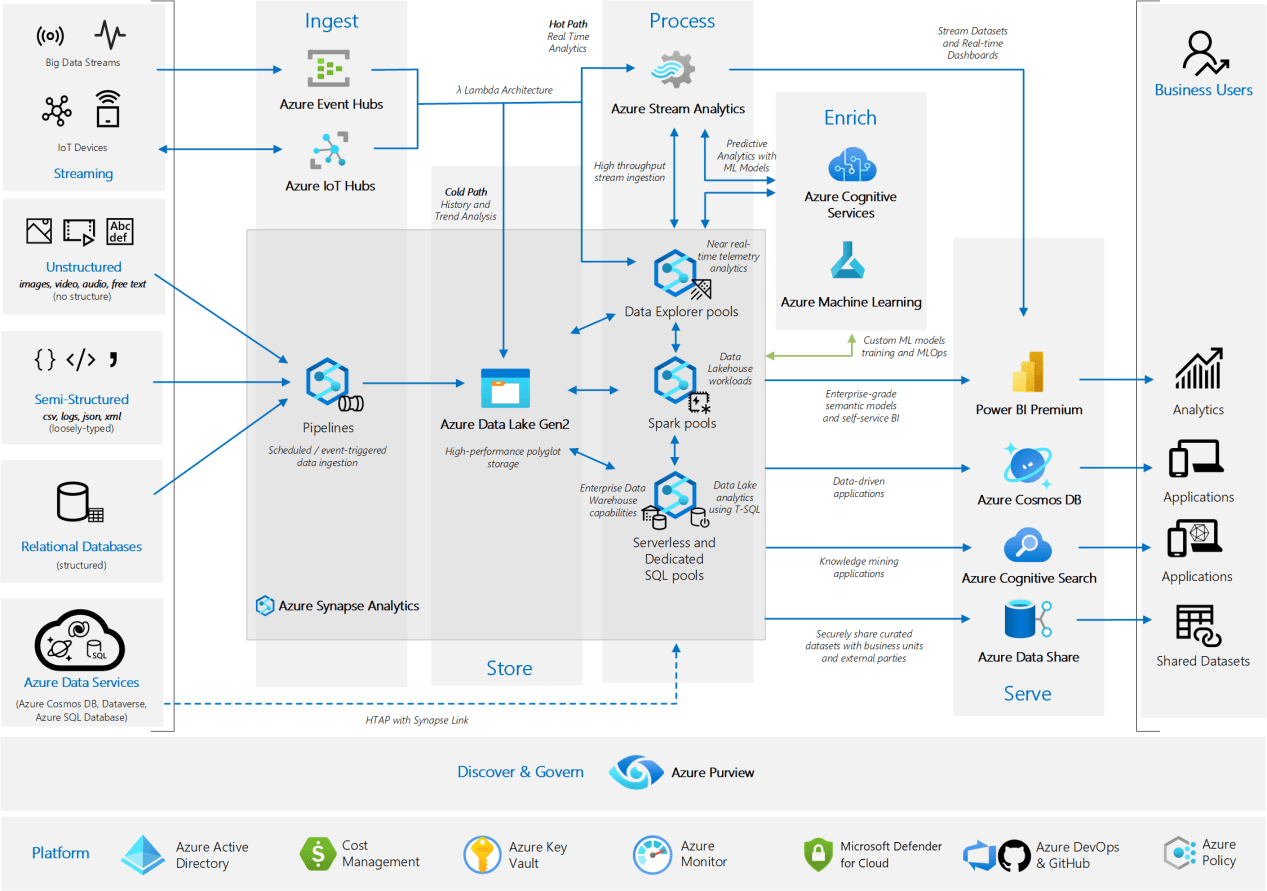

Azure cloud services let us build a modern data lake platform that can store petabytes of structured and unstructured data, run ingestion and processing pipelines to ingest, clean, and transform that data, and provide tools to query and analyze it. Depending on the nature and size of the data and on the analysis requirements, we can combine various Azure analytics and data services to build our data analytics architecture. Below is a sample architecture that illustrates the layers and components a typical data lake architecture comprises.

Azure Data Lake Architecture

This diagram shows a layered architecture consisting of six layers:

Ingest – This layer ingests data into the storage targets that form the data lake, typically into an area called the raw zone. These targets could be an object store that holds raw files, or databases. The ingestion layer can connect to diverse data sources and can ingest batch or streaming data into the lake. Ingestion pipelines can be triggered on a predefined schedule, in response to an event, or explicitly through REST APIs.
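To keep the raw zone easy to navigate, many teams land each ingested batch under a path partitioned by source system, dataset, and ingestion date. The sketch below is a minimal illustration of that idea; the `raw/<source>/<dataset>/year=YYYY/month=MM/day=DD/` layout and the `raw_zone_path` helper are our own assumptions, not something Azure prescribes.

```python
from datetime import datetime, timezone


def raw_zone_path(source: str, dataset: str, ingested_at: datetime, file_name: str) -> str:
    """Build a raw-zone path partitioned by ingestion date.

    Hypothetical layout: raw/<source>/<dataset>/year=YYYY/month=MM/day=DD/<file_name>
    The timestamp is normalized to UTC so that all batches share one calendar.
    """
    if ingested_at.tzinfo is None:
        raise ValueError("ingested_at must be timezone-aware")
    ts = ingested_at.astimezone(timezone.utc)
    return (
        f"raw/{source}/{dataset}/"
        f"year={ts:%Y}/month={ts:%m}/day={ts:%d}/"
        f"{file_name}"
    )


if __name__ == "__main__":
    batch_time = datetime(2023, 5, 7, 14, 30, tzinfo=timezone.utc)
    print(raw_zone_path("crm", "customers", batch_time, "customers_0001.json"))
    # raw/crm/customers/year=2023/month=05/day=07/customers_0001.json
```

The `key=value` folder names follow the Hive-style partitioning convention that Spark recognizes, so downstream jobs can skip folders outside the dates they need.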

Store – This layer stores both the raw data (raw zone) and the processed data (curated zone). It should be scalable and cost-effective so that we can store vast quantities of data, and it should provide security, high availability, data archival, and backup capabilities.
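Archival can be automated with Azure Storage lifecycle management, which moves blobs to cooler access tiers as they age. The Python sketch below only builds the policy document as a dictionary; the field names reflect our reading of the lifecycle policy schema, and the rule name, prefix, and day thresholds are hypothetical, so check them against the current documentation before applying a policy.

```python
import json


def lifecycle_policy(prefix: str, cool_after_days: int, archive_after_days: int) -> dict:
    """Build a lifecycle management policy that tiers aging block blobs under `prefix`.

    Field names follow our reading of the Azure Storage lifecycle management
    policy schema; the rule name and thresholds are illustrative assumptions.
    """
    if not 0 < cool_after_days < archive_after_days:
        raise ValueError("expected 0 < cool_after_days < archive_after_days")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "tier-aging-raw-data",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        # prefixMatch values start with the container name.
                        "prefixMatch": [prefix],
                    },
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                            "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                        }
                    },
                },
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical container "datalake" with a raw/ folder; 30 and 180 days are examples.
    print(json.dumps(lifecycle_policy("datalake/raw/", 30, 180), indent=2))
```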

Process and Enrich – This layer validates and transforms your datasets and moves them into the curated zone of your data lake. It contains the data processing pipelines and the means to orchestrate the data flow, along with mechanisms for auditing and reconciling data at various stages of the pipeline. At this stage, machine learning models can also be invoked to enrich your datasets and generate further business insights.
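The validate, move, and reconcile steps can be illustrated with plain Python. This is a minimal sketch under stated assumptions: the required fields (`order_id`, `amount`), the rejection reasons, and the `BatchResult` type are hypothetical, and in practice similar logic could run inside a Synapse Spark pool or a Data Factory data flow rather than as a standalone script.

```python
from dataclasses import dataclass, field

# Hypothetical schema for an "orders" dataset.
REQUIRED_FIELDS = ("order_id", "amount")


@dataclass
class BatchResult:
    curated: list[dict] = field(default_factory=list)
    rejected: list[dict] = field(default_factory=list)


def validate_and_transform(raw_records: list[dict]) -> BatchResult:
    """Split raw records into curated (cleaned) and rejected (with a reason)."""
    result = BatchResult()
    for record in raw_records:
        missing = [name for name in REQUIRED_FIELDS if record.get(name) in (None, "")]
        if missing:
            result.rejected.append({**record, "_reason": "missing " + ", ".join(missing)})
            continue
        try:
            amount = float(record["amount"])
        except (TypeError, ValueError):
            result.rejected.append({**record, "_reason": "amount is not numeric"})
            continue
        # Transform: normalize types before the record enters the curated zone.
        result.curated.append({"order_id": str(record["order_id"]), "amount": round(amount, 2)})
    return result


def reconcile(input_count: int, result: BatchResult) -> bool:
    """Audit check: every input record must land in exactly one output set."""
    return input_count == len(result.curated) + len(result.rejected)


if __name__ == "__main__":
    raw = [
        {"order_id": 1, "amount": "19.90"},
        {"order_id": 2, "amount": "n/a"},
        {"amount": "5"},
    ]
    batch = validate_and_transform(raw)
    print(batch.curated)  # [{'order_id': '1', 'amount': 19.9}]
    print([r["_reason"] for r in batch.rejected])  # ['amount is not numeric', 'missing order_id']
    print(reconcile(len(raw), batch))  # True
```

Routing bad records to a rejected set instead of silently dropping them is what makes the reconciliation check possible: the number of input records must equal the curated count plus the rejected count.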

Serve – This layer provides tools that support a range of analysis methods, including SQL, batch analytics, BI dashboards, reporting, and ML.

Security and Monitoring – This layer secures the components of every layer in the data analytics architecture. It provides authentication, authorization, encryption, monitoring, logging, and alerting.

Discover and Govern – This layer registers all your data sources, organizes them, and supports auto-discovery of new datasets, cataloging, and metadata updates. It stores data classification, data sensitivity, and data lineage information. It can also help us maintain a business glossary with the business terminology users need to understand what datasets mean and how they are meant to be used across the organization.
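The core ideas of this layer (registration, classification, and lineage) can be modeled in a few lines. The in-memory `Catalog` class below is purely illustrative and hypothetical; it is not the API of any Azure governance service, and the dataset names and the "PII" label are made up for the example.

```python
from collections import deque
from collections.abc import Iterable
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    zone: str  # e.g. "raw" or "curated"
    classifications: set[str] = field(default_factory=set)
    upstream: list[str] = field(default_factory=list)


class Catalog:
    """Hypothetical in-memory catalog: registration, classification, lineage."""

    def __init__(self) -> None:
        self._datasets: dict[str, Dataset] = {}

    def register(self, name: str, zone: str,
                 classifications: Iterable[str] = (), upstream: Iterable[str] = ()) -> None:
        parents = list(upstream)
        # Lineage edges may only point at datasets that are already registered.
        for parent in parents:
            if parent not in self._datasets:
                raise KeyError(f"unknown upstream dataset: {parent}")
        self._datasets[name] = Dataset(name, zone, set(classifications), parents)

    def lineage(self, name: str) -> list[str]:
        """Return every transitive upstream dataset, nearest first (breadth-first)."""
        seen: set[str] = set()
        order: list[str] = []
        queue = deque(self._datasets[name].upstream)
        while queue:
            current = queue.popleft()
            if current in seen:
                continue
            seen.add(current)
            order.append(current)
            queue.extend(self._datasets[current].upstream)
        return order

    def with_classification(self, label: str) -> list[str]:
        """List datasets tagged with a classification such as "PII"."""
        return sorted(d.name for d in self._datasets.values() if label in d.classifications)


if __name__ == "__main__":
    catalog = Catalog()
    catalog.register("raw.crm_customers", "raw", {"PII"})
    catalog.register("raw.orders", "raw")
    catalog.register("curated.customer_orders", "curated", {"PII"},
                     ["raw.crm_customers", "raw.orders"])
    catalog.register("curated.sales_summary", "curated", upstream=["curated.customer_orders"])
    print(catalog.lineage("curated.sales_summary"))
    # ['curated.customer_orders', 'raw.crm_customers', 'raw.orders']
    print(catalog.with_classification("PII"))
    # ['curated.customer_orders', 'raw.crm_customers']
```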

Azure Services Used

Below are some of the services used in the various layers of the architecture, as shown in the diagram.

Azure Event Hubs – A fully managed, real-time data ingestion service that can stream millions of events per second from any source to build dynamic data pipelines. Its Capture feature automatically writes the streaming data in Event Hubs to Azure Blob Storage or Azure Data Lake Storage Gen1 or Gen2 (Gen1 has since been retired).
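Captured files are written in Avro format, and by default, as we understand the Event Hubs Capture documentation, each file is named `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}` with zero-padded UTC time components. The small sketch below builds and parses such names, which helps downstream jobs locate the files for a given time window. The function names are hypothetical, and if you configure a custom archive name format the code must change accordingly.

```python
from datetime import datetime, timezone


def capture_blob_name(namespace: str, event_hub: str, partition_id: str,
                      window_start: datetime) -> str:
    """Build a blob name in the (assumed) default Event Hubs Capture format."""
    if window_start.tzinfo is None:
        raise ValueError("window_start must be timezone-aware")
    ts = window_start.astimezone(timezone.utc)
    return f"{namespace}/{event_hub}/{partition_id}/{ts:%Y/%m/%d/%H/%M/%S}.avro"


def parse_capture_blob_name(blob_name: str) -> tuple[str, str, str, datetime]:
    """Split a captured blob name back into its parts (window start in UTC)."""
    parts = blob_name.removesuffix(".avro").split("/")
    if len(parts) != 9:
        raise ValueError(f"unexpected capture blob name: {blob_name}")
    namespace, event_hub, partition_id = parts[:3]
    # Remaining six segments: year, month, day, hour, minute, second.
    window_start = datetime(*(int(p) for p in parts[3:]), tzinfo=timezone.utc)
    return namespace, event_hub, partition_id, window_start


if __name__ == "__main__":
    start = datetime(2017, 12, 8, 3, 3, 17, tzinfo=timezone.utc)
    name = capture_blob_name("mynamespace", "myeventhub", "0", start)
    print(name)  # mynamespace/myeventhub/0/2017/12/08/03/03/17.avro
    print(parse_capture_blob_name(name)[3] == start)  # True
```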

Azure Data Lake Storage Gen2 – Builds on Azure Blob Storage and adds enhancements in performance, management, and security. In particular, the hierarchical namespace significantly improves the performance of many analytics jobs, because operations such as renaming or deleting a directory become single metadata operations instead of requiring every object under a path prefix to be processed. It is also scalable and cost-effective.
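Analytics engines such as Apache Spark reach an ADLS Gen2 account through the ABFS driver, using URIs of the form `abfss://<container>@<account>.dfs.core.windows.net/<path>`. The helper below is a small sketch for building such URIs; the account and container names in the example are hypothetical, and the validation covers only the storage account naming rule (3 to 24 lowercase letters or digits).

```python
import re

# Storage account names: 3 to 24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def abfss_uri(account: str, container: str, path: str = "") -> str:
    """Build an ABFS URI for a path in an ADLS Gen2 (hierarchical namespace) account."""
    if not _ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    # Drop empty segments so "/raw//crm/" and "raw/crm" give the same URI.
    clean_path = "/".join(segment for segment in path.split("/") if segment)
    return f"abfss://{container}@{account}.dfs.core.windows.net/{clean_path}"


if __name__ == "__main__":
    # Hypothetical account "contosolake" and container "datalake".
    print(abfss_uri("contosolake", "datalake", "/raw/crm/customers/"))
    # abfss://datalake@contosolake.dfs.core.windows.net/raw/crm/customers
```

In a Synapse Spark pool, a URI built this way could then be passed to a reader such as `spark.read.parquet(...)`.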

Azure Synapse Analytics – An end-to-end analytics service that brings together data integration, enterprise data warehousing, and big data analytics, supporting both data lake and data warehouse use cases. It combines multiple services, including Azure Synapse Pipelines (for data ingestion and processing), Data Explorer pools (for near-real-time analytics), Spark pools (for data processing workloads), and serverless and dedicated SQL pools (for building an enterprise data warehouse and running T-SQL queries).

Azure Data Factory – Although not shown in the sample architecture, Azure Data Factory can also be used to build scalable data ingestion and data processing pipelines.

Azure Stream Analytics – For real-time insights, use a Stream Analytics job to implement the “hot path” of the Lambda architecture pattern and derive insights from stream data in transit. This enables scenarios such as low-latency dashboarding, streaming ETL, and real-time alerting.
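Stream Analytics jobs are written in its SQL-like query language, where windowing functions such as `TumblingWindow` group events into fixed, non-overlapping intervals. The plain-Python model below is not Stream Analytics code; it is a sketch of what a per-key tumbling-window count computes, assuming timezone-aware event timestamps and windows aligned to the Unix epoch (a simplification of ours). The function name and sensor keys are hypothetical.

```python
import math
from collections import Counter
from datetime import datetime, timezone


def tumbling_window_counts(events, window_seconds: int) -> dict:
    """Count events per (window start, key) in fixed, non-overlapping windows.

    `events` is an iterable of (timezone-aware datetime, key) pairs.
    """
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    counts = Counter()
    for timestamp, key in events:
        epoch_seconds = math.floor(timestamp.timestamp())
        # Each event belongs to exactly one window: the one containing its timestamp.
        window_start = epoch_seconds - epoch_seconds % window_seconds
        counts[(datetime.fromtimestamp(window_start, tz=timezone.utc), key)] += 1
    # Sort by window start, then key, so the output reads like a time series.
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    t0 = datetime(2023, 1, 1, tzinfo=timezone.utc)
    events = [
        (t0.replace(second=5), "sensor-a"),
        (t0.replace(second=20), "sensor-a"),
        (t0.replace(second=40), "sensor-b"),
        (t0.replace(minute=1, second=2), "sensor-a"),
    ]
    for (start, key), count in tumbling_window_counts(events, 30).items():
        print(start.isoformat(), key, count)
    # 2023-01-01T00:00:00+00:00 sensor-a 2
    # 2023-01-01T00:00:30+00:00 sensor-b 1
    # 2023-01-01T00:01:00+00:00 sensor-a 1
```

In an actual Stream Analytics query, the equivalent would be a `GROUP BY` over the key column and `TumblingWindow(second, 30)`.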

Azure Cognitive Services (now part of Azure AI services) – Offers a broad set of services that can be used for data enrichment and data quality, such as entity recognition, sentiment analysis, image and video content analysis, content moderation, and anomaly detection. It can also be used in the serve layer to improve the user experience with features such as Personalizer, text to speech, speech to text, and question answering.

Azure Machine Learning – As part of the data enrichment process, we can build and use custom ML models in Azure Machine Learning, in addition to the prebuilt models available in Azure Cognitive Services.

Azure Data Share – Data can also be shared securely with other business units or with trusted external partners using Azure Data Share. We control what is shared, who receives the data, and the terms of use.

Power BI Premium – We can load relevant data from the data lake into Power BI datasets for visualization and exploration. Power BI datasets implement a semantic model that simplifies the analysis of business data and relationships, and business analysts use Power BI reports and dashboards to analyze the data and derive business insights.

Azure Purview (now Microsoft Purview) – Can be used for data governance across the entire organizational data landscape, including discovery of and insights into your data assets, data classification, and data sensitivity.

Azure Monitor – Can be used to monitor the entire data platform. It tracks the performance, usage, and availability of the various stages, and provides alerting and autoscale capabilities to respond to problems.

Azure Active Directory (now Microsoft Entra ID) – An enterprise identity service that provides single sign-on, multifactor authentication, and conditional access to the data in our data lake.

If you are looking for a data lake implementation on Azure (or on other vendors' platforms), please reach out to us. We can show you some of our past data lake implementations, explain the pros and cons of various architectures from both a technology and a cost point of view, and help you with the implementation.

Reach out to us at nikhilesh@helicaltech.com for more information.
